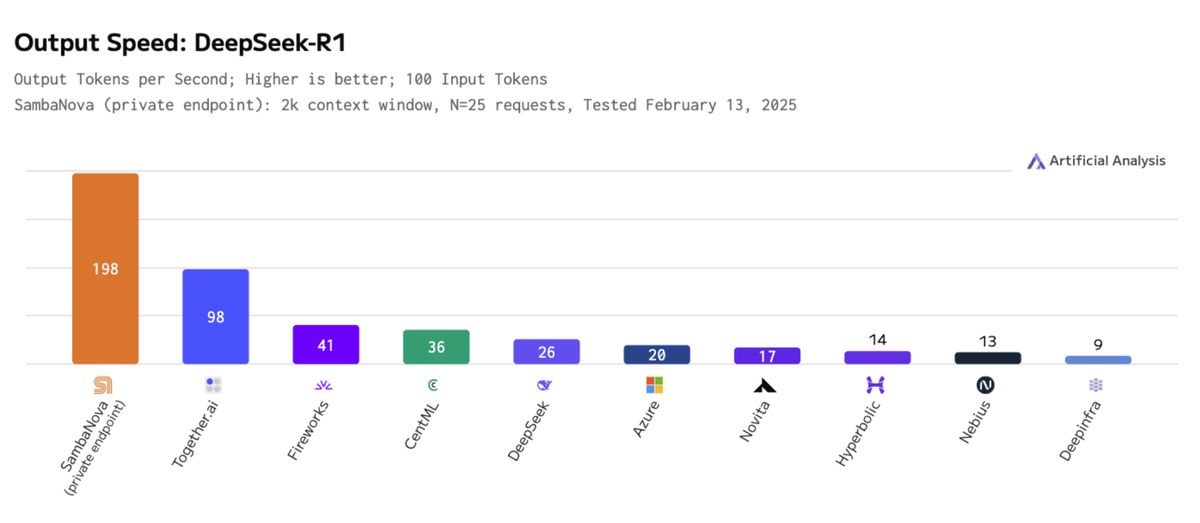

SambaNova runs DeepSeek-R1 at 198 tokens/sec using 16 custom chips

The SN40L RDU chip is reportedly 3X faster and 5X more efficient than GPUs

A 5X speed boost is promised soon, with 100X capacity on the cloud by year-end

Chinese AI upstart DeepSeek has very quickly made a name for itself in 2025 with R1, its large-scale open source language model built for advanced reasoning tasks, which performs on par with the industry's top models while being more cost-efficient.

SambaNova Systems, an AI startup founded in 2017 by experts from Sun/Oracle and Stanford University, has now announced what it claims is the world's fastest deployment of the DeepSeek-R1 671B LLM to date.

The company says it has achieved 198 tokens per second, per user, using just 16 custom-built chips, replacing the 40 racks of 320 Nvidia GPUs that would typically be required.

Independently verified

“Powered by the SN40L RDU chip, SambaNova is the fastest platform running DeepSeek,” said Rodrigo Liang, CEO and co-founder of SambaNova. “This will increase to 5X faster than the latest GPU speed on a single rack – and by year-end, we will offer 100X capacity for DeepSeek-R1.”

While Nvidia's GPUs have traditionally powered large AI workloads, SambaNova argues that its reconfigurable dataflow architecture offers a more efficient solution. The company claims its hardware delivers three times the speed and five times the efficiency of leading GPUs while preserving the full reasoning power of DeepSeek-R1.

“DeepSeek-R1 is one of the most advanced frontier AI models available, but its full potential has been limited by the inefficiency of GPUs,” said Liang. “That changes today. We’re bringing the next major breakthrough – collapsing inference costs and reducing hardware requirements from 40 racks to just one – to offer DeepSeek-R1 at the fastest speeds, efficiently.”

George Cameron, co-founder of AI evaluation firm Artificial Analysis, said his company had “independently benchmarked SambaNova’s cloud deployment of the full 671 billion parameter DeepSeek-R1 Mixture of Experts model at over 195 output tokens/s, the fastest output speed we have ever measured for DeepSeek-R1. High output speeds are particularly important for reasoning models, as these models use reasoning output tokens to improve the quality of their responses. SambaNova’s high output speeds will support the use of reasoning models in latency-sensitive use cases.”
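Why output speed matters for reasoning models comes down to simple arithmetic: streaming time is roughly the number of output tokens divided by tokens per second. The sketch below assumes a hypothetical 5,000-token reasoning trace and a hypothetical slower deployment running at 50 tokens/s; neither figure comes from SambaNova or Artificial Analysis, and the calculation ignores time-to-first-token and network overhead.

```python
def generation_seconds(output_tokens: int, tokens_per_second: float) -> float:
    """Approximate time to stream output_tokens at a constant output speed.

    Simplifying assumption: ignores time-to-first-token and network overhead.
    """
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return output_tokens / tokens_per_second


# Hypothetical reasoning trace length, not a figure from the article.
trace_tokens = 5_000

# 198 tok/s is SambaNova's claimed per-user speed; 50 tok/s is a hypothetical slower deployment.
for speed in (198, 50):
    print(f"{speed:>4} tok/s -> {generation_seconds(trace_tokens, speed):.1f} s")
```

Under these assumptions the same trace takes about 25 seconds at 198 tokens/s versus 100 seconds at 50 tokens/s, which is the gap that matters for latency-sensitive use cases.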

DeepSeek-R1 671B is now available on SambaNova Cloud, with API access offered to select users. The company is scaling capacity rapidly and says it hopes to reach 20,000 tokens per second of total rack throughput “in the near future”.
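One rough way to read the 20,000 tokens/s target is to divide it by the 198 tokens/s per-user figure. The sketch assumes total throughput could be shared evenly and that per-user speed would not degrade under load; SambaNova has not said either is the case, so treat the result as an upper-bound illustration only.

```python
def concurrent_streams(total_throughput: float, per_user_speed: float) -> int:
    """Upper bound on users served at per_user_speed if total_throughput were shared evenly.

    Assumption: per-user speed stays constant under load (not stated in the article).
    """
    if per_user_speed <= 0:
        raise ValueError("per_user_speed must be positive")
    return int(total_throughput // per_user_speed)


# 20,000 tok/s rack target and 198 tok/s per-user speed are the figures SambaNova cites.
print(concurrent_streams(20_000, 198))
```

On those assumptions, one rack at the target throughput would serve roughly 100 users at the currently quoted per-user speed.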

(Image credit: Artificial Analysis)
