Have you Googled something recently only to be met with a cute little diamond logo above some magically appearing words? Google’s AI Overview combines Google Gemini’s language models (which generate the responses) with Retrieval-Augmented Generation, which pulls in the relevant information.

In theory, it has made an incredible product, Google’s search engine, even easier and faster to use.

However, because creating these summaries is a two-step process, issues can arise when there’s a disconnect between the retrieval and the language generation.

While the retrieved information might be accurate, the AI can make erroneous leaps and draw strange conclusions when generating the summary.

(Image credit: Google)

That has led to some famous gaffes, such as when it became the laughing stock of the internet in mid-2024 for recommending glue as a way to make sure cheese wouldn’t slide off your homemade pizza. And we loved the time it described running with scissors as “a cardio exercise that can improve your heart rate and require concentration and focus”.

These prompted Liz Reid, Head of Google Search, to publish an article titled About Last Week, stating that these examples “highlighted some specific areas that we needed to improve”. Beyond that, she diplomatically blamed “nonsensical queries” and “satirical content”.

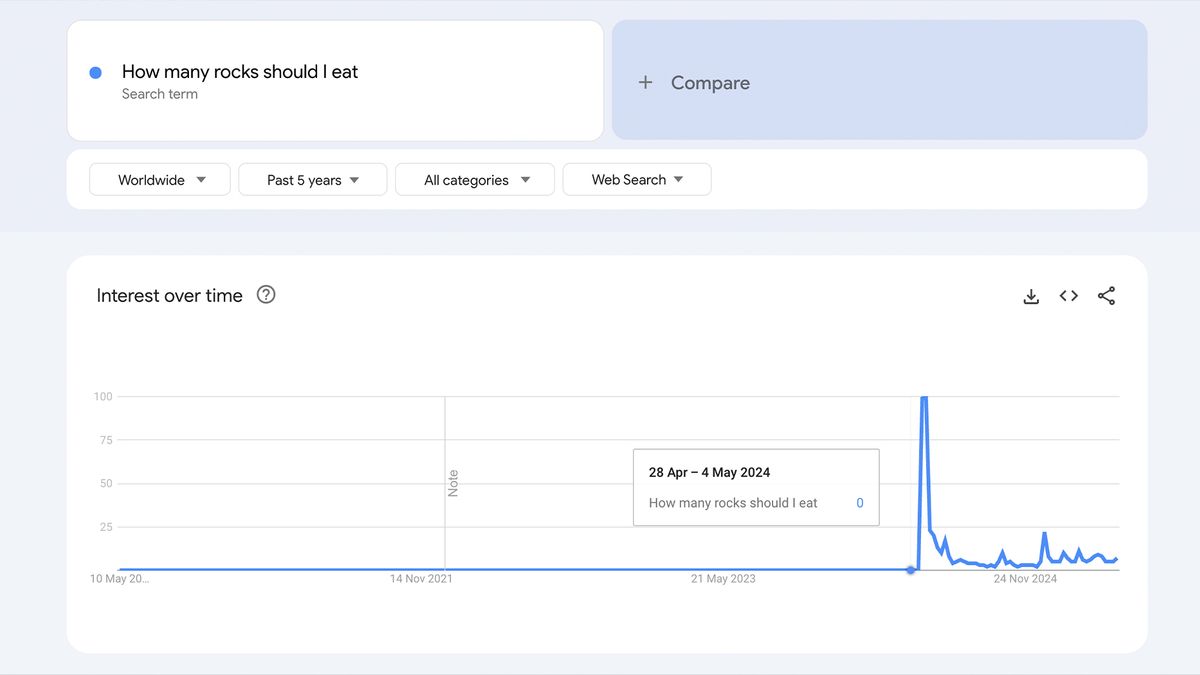

She was at least partly right. Some of the problematic queries were highlighted purely to make AI look stupid. As you can see below, the query “How many rocks should I eat?” wasn’t a common search before the introduction of AI Overviews, and it hasn’t been since.

(Image credit: Google)

However, almost a year on from the pizza-glue fiasco, people are still tricking Google’s AI Overviews into fabricating information, or “hallucinating”, the euphemism for AI lies.

Many misleading queries seem to be ignored as of writing. Even so, Engadget reported just last month that AI Overviews would make up explanations for fake idioms like “you can’t marry pizza” or “never rub a basset hound’s laptop”.

So, AI is sometimes wrong when you deliberately trick it. Big deal. But now that it’s used by billions and includes crowd-sourced medical advice, what happens when a genuine question causes it to hallucinate?

AI would work wonderfully if everyone who used it checked where its information came from. But many people, if not most people, aren’t going to do that.

And therein lies the key problem. As a writer, I already find Overviews inherently a bit annoying because I want to read human-written content. But even putting my pro-human bias aside, AI becomes seriously problematic if it’s so easily untrustworthy. And it has arguably become downright dangerous now that it’s basically ubiquitous when searching, because a certain portion of users are going to take its information at face value.

I mean, years of searching have trained us all to trust the results at the top of the page.

Wait… is that true?

(Image credit: Future)

Like many people, I sometimes struggle with change. I didn’t like it when LeBron went to the Lakers, and I stuck with an MP3 player over an iPod for way too long.

However, now that they’re the first thing I see on Google most of the time, Google’s AI Overviews are a little harder to ignore.

I’ve tried using it like Wikipedia: potentially unreliable, but good for reminding me of forgotten facts or for learning the basics of a topic that won’t cause me any agita if it’s not 100% accurate.

Yet even on seemingly simple queries it can fail spectacularly. For example, I was watching a movie the other week and one actor really looked like Lin-Manuel Miranda (creator of the musical Hamilton), so I Googled whether he had any brothers.

The AI Overview informed me that “Yes, Lin-Manuel Miranda has two younger brothers named Sebastián and Francisco.”

For a few minutes I thought I was a genius at recognising people… until a little further research showed that Sebastián and Francisco are actually Miranda’s two children.

Wanting to give it the benefit of the doubt, I figured it would have no trouble listing Star Wars quotes to help me think of a headline.

Fortunately, it gave me exactly what I needed: “Hello there!” and “It’s a trap!”, and it even quoted “No, I am your father” rather than the too-commonly-repeated “Luke, I am your father”.

Alongside these legitimate quotes, however, it claimed Anakin had said “If I go, I go with a bang” before his transformation into Darth Vader.

I was shocked that it could be so wrong… and then I started second-guessing myself. I gaslit myself into thinking I must be mistaken. I was so unsure that I triple-checked whether the quote existed and shared it with the office, where it was quickly (and correctly) dismissed as another bout of AI lunacy.

This little moment of self-doubt, about something as silly as Star Wars, scared me. What if I had no knowledge of the topic I was asking about?

This study by SE Ranking actually shows that Google’s AI Overviews avoid (or respond cautiously to) topics in finance, politics, health and law. That implies Google knows its AI isn’t up to the task of more serious queries just yet.

But what happens when Google thinks it has improved to the point that it is?

It’s the tech… but also how we use it

(Image credit: Google)

If everyone using Google could be trusted to double-check the AI results, or to click through to the source links provided by the overview, its inaccuracies wouldn’t be an issue.

But as long as there’s an easier option, a more frictionless path, people tend to take it.

Despite having more information at our fingertips than at any previous time in human history, literacy and numeracy skills are declining in many countries. Case in point: a 2022 study found that just 48.5% of Americans reported having read at least one book in the previous 12 months.

The technology itself isn’t the issue. As Associate Professor Grant Blashki has eloquently argued, the problems arise in how we use the technology (and indeed, how we’re steered towards using it).

For example, an observational study by researchers at Canada’s McGill University found that regular use of GPS can result in worse spatial memory and an inability to navigate on your own. I can’t be the only one who has used Google Maps to get somewhere and then had no idea how to get back.

Neuroscience has clearly demonstrated that struggling is good for the brain. Cognitive Load Theory states that your brain needs to work through things in order to learn. It’s hard to imagine struggling much when you search a question, read the AI summary and then call it a day.

Make the choice to think

(Image credit: Shutterstock)

I’m not committing to never using GPS again, but given how often Google’s AI Overviews are untrustworthy, I’d get rid of them if I could. Unfortunately, there’s no way to do that for now.

Even hacks like adding a cuss word to your query no longer work. (And while using the F-word still seems to work most of the time, it also makes for weirder and more, uh, ‘adult-oriented’ search results that you’re probably not looking for.)

Of course, I’ll still use Google, because it’s Google. It isn’t going to reverse its AI ambitions anytime soon, and while I might wish it would restore the option to opt out of AI Overviews, maybe it’s better the devil you know.

Right now, the only true defence against AI misinformation is to make a concerted effort not to use it. Let it take notes in your work meetings or think up some pick-up lines, but when it comes to using it as a source of information, I’ll be scrolling past it and looking for a quality human-authored (or at least human-checked) article in the top results, as I’ve done for nearly my entire existence.

I mentioned earlier that one day these AI tools might genuinely become a reliable source of information. They might even be good enough to take on politics. But today isn’t that day.

In fact, as the New York Times reported on May 5, as Google’s and ChatGPT’s AI tools become more powerful, they’re also becoming increasingly unreliable. So I’m not sure I’ll ever trust them to summarise any political candidate’s policies.

In tests of these ‘reasoning systems’, the highest recorded hallucination rate was a whopping 79%. Amr Awadallah, the chief executive of Vectara, an AI agent and assistant platform for enterprises, put it bluntly: “Despite our best efforts, they will always hallucinate.”
